A New Way Forward: Psychedelic Therapy for the Treatment of Mental Illness

Post by Laura Maile

The need for effective treatments for mental illness

An estimated 26% of adults in the United States suffer from depression, anxiety, or other related mental health disorders, and around 20% of patients don’t respond to standard treatments. Major depressive disorder (MDD) is a substantial public health problem, with an estimated economic burden of $210 billion every year in the US alone. Drug treatments for MDD usually require weeks to reach effective levels, and many who take such treatments do so indefinitely. Along with the adverse side effects reported for many drugs used to treat depression and anxiety, the cost burden to patients and the public highlights the critical need for improved treatments.

What are psychedelics?

Many synthetic and naturally occurring substances are categorized as “psychedelics,” which are defined by their ability to alter consciousness, perception, cognition, and mood, usually through action at serotonin receptors. Classic psychedelics, including psilocybin, mescaline, N,N-dimethyltryptamine (DMT), and lysergic acid diethylamide (LSD), bind to a variety of receptors, but exert their psychoactive effects by binding to a specific type of serotonin receptor, the 5-HT2A receptor, which is mostly located on excitatory neurons in the cortex. Binding to these receptors triggers intracellular signaling cascades that ultimately produce synaptic plasticity and increased activity of neurons in regions of the brain associated with cognition, attention, emotion regulation, and sensory perception. Scientists don’t yet fully understand the specific mechanisms that lead to the acute effects of the psychedelic experience, nor the long-term effects of psychedelic use in treating mental health disorders.

What’s the current state of research on psychedelic psychotherapy?

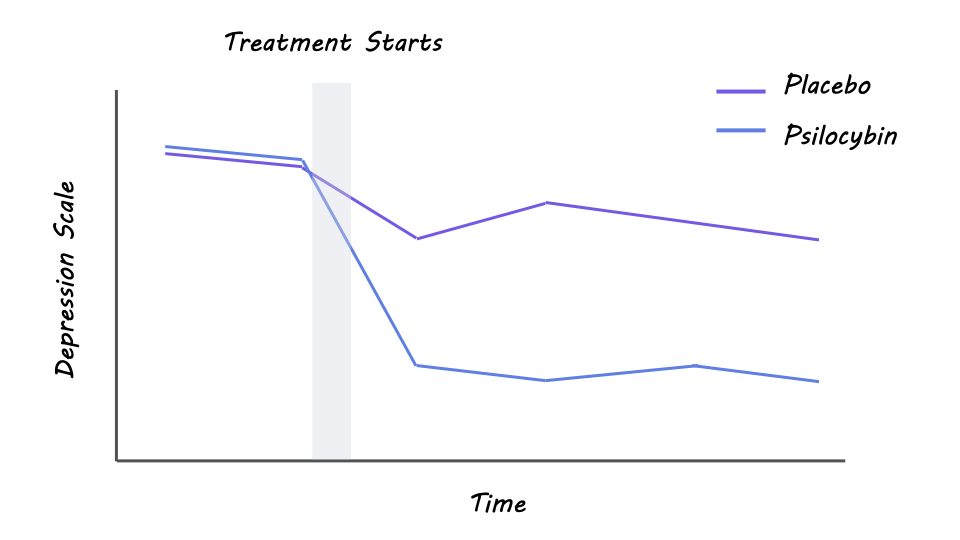

Several studies support the high therapeutic potential of psychedelics in the treatment of depression, anxiety, alcohol-use disorder, substance-use disorder, and post-traumatic stress disorder. Evidence comes from both surveys of psychedelic self-administration and small-scale clinical investigations with controlled administration and medical supervision, usually in conjunction with psychological support or therapy before, during, and after the treatment sessions. In 2021, Davis and colleagues published a randomized clinical trial of 24 participants with MDD, showing that just two sessions of psilocybin administered alongside psychotherapy led to significant improvement in symptoms in 71% of participants, and full remission in 54% of participants, one month later. Ross and colleagues reported similar findings in another small-scale randomized placebo-controlled trial focusing on the treatment of anxiety and depression in cancer patients. In this study, between 60% and 80% of patients saw continued relief from anxiety and depression symptoms 6.5 months after a single dose of psilocybin combined with psychotherapy. Another study, published in 2022 by Holze and colleagues, tested the effects of LSD-assisted psychotherapy in 42 participants with anxiety, both with and without a life-threatening illness. In this study, participants received two treatment sessions with LSD and two with placebo, and most experienced reduced anxiety and depression symptoms 16 weeks later. Overall, this research suggests that psychedelic therapy can be effective in treating mental illness, even after just a couple of treatments.

Figure: Results from a randomized clinical trial (von Rotz et al., 2022).

What does the future look like?

Recent studies in rodents indicate that some of the beneficial effects of psychedelics for depression treatment may depend on the TrkB receptor, not serotonin receptors. More research is needed to further understand the mechanisms of action that underlie the beneficial effects of psychedelics. One concern regarding psychedelic therapy is the potential for side effects. More research is needed to determine whether existing psychedelic drugs can be chemically altered to target receptors in a way that limits the hallucinogenic effects while maintaining the beneficial antidepressant effects. While past clinical trials have indicated the potential benefits of using psychedelics to treat mental illness, additional large-scale randomized clinical trials are needed to establish the safety and efficacy of various psychedelic drugs for treatment-resistant depression, anxiety, and other mental illnesses, especially for individuals already taking medications like antidepressants. Such trials include studies of 5-MeO-DMT, a component of Sonoran Desert toad venom that produces an intense psychedelic experience lasting between 5 and 20 minutes, much shorter than the hours-long effects of LSD and psilocybin.

The takeaway

Reviews of many recent clinical studies indicate that psychedelics have huge potential as an alternative treatment for depression, anxiety, and other mental health disorders. When administered in a safe environment in combination with psychotherapy, psychedelics can result in long-term reductions in depression and anxiety symptoms, with few adverse effects reported. More large-scale clinical trials are needed to assess the safety and efficacy of these treatments in a more diverse pool of patients. The research and development of approved psychedelics is an important ongoing effort that may have substantial impacts on the personal, social, and economic burdens of mental health disorders worldwide.

References

Carhart-Harris et al. Psilocybin with psychological support for treatment-resistant depression: an open-label feasibility study. 2016. The Lancet Psychiatry.

Davis AK et al. Effects of Psilocybin-Assisted Therapy on Major Depressive Disorder: A Randomized Clinical Trial. 2021. JAMA Psychiatry.

DiVito AJ et al. Psychedelics as an emerging novel intervention in the treatment of substance use disorder: a review. 2020. Mol Biol Rep.

Holze F et al. Lysergic Acid Diethylamide-Assisted Therapy in Patients With Anxiety With and Without a Life-Threatening Illness: A Randomized, Double-Blind, Placebo-Controlled Phase II Study. 2022. Biological Psychiatry.

Kocak DD, Roth BL. Examining psychedelic drug action. 2024. Nat Chem.

McClure-Begley et al. The promises and perils of psychedelic pharmacology for psychiatry. 2022. Nat Rev Drug Discov.

Moliner R et al. Psychedelics promote plasticity by directly binding to BDNF receptor TrkB. 2023. Nature Neuroscience.

Ross S et al. Rapid and sustained symptom reduction following psilocybin treatment for anxiety and depression in patients with life-threatening cancer: a randomized controlled trial. 2016. J Psychopharmacol.

von Rotz et al. Single-dose psilocybin-assisted therapy in major depressive disorder: a placebo-controlled, double-blind, randomised clinical trial. 2022. eClinicalMedicine.