Neuroimaging Features Help Predict Treatment Outcomes for Major Depressive Disorder

Post by Meagan Marks

The takeaway

Neuroimaging data show great promise for predicting treatment outcomes in patients with major depressive disorder, which could help clinicians choose the most effective treatment for each patient.

What's the science?

Major depressive disorder (MDD) is a highly prevalent mental health condition that is challenging to treat. Although several treatment options are available for MDD, their effectiveness varies from person to person. Clinicians currently rely on clinical features to choose a treatment for a given patient, yet 30–50% of patients don't respond well to their initial treatment. This leads to a trial-and-error approach in which different options are tried over several weeks or months until an effective one is found.

Recent research suggests that neuroimaging assessments, in which the brain is scanned and the resulting data are analyzed with machine learning models, may better predict which MDD treatments will work best for a particular patient. This week in Molecular Psychiatry, Long and colleagues review multiple studies to evaluate how well neuroimaging predicts treatment outcomes, which imaging techniques are most accurate, and which brain areas are most useful for prediction.

How did they do it?

To gain a more comprehensive understanding of how neuroimaging data can predict treatment success in patients with MDD, the authors conducted a meta-analysis combining data from over 50 treatment-prediction studies. They selected studies based on predefined criteria, ultimately including 13 studies of pretreatment clinical features (4,301 patients in total) and 44 studies of pretreatment neuroimaging features (2,623 patients in total).

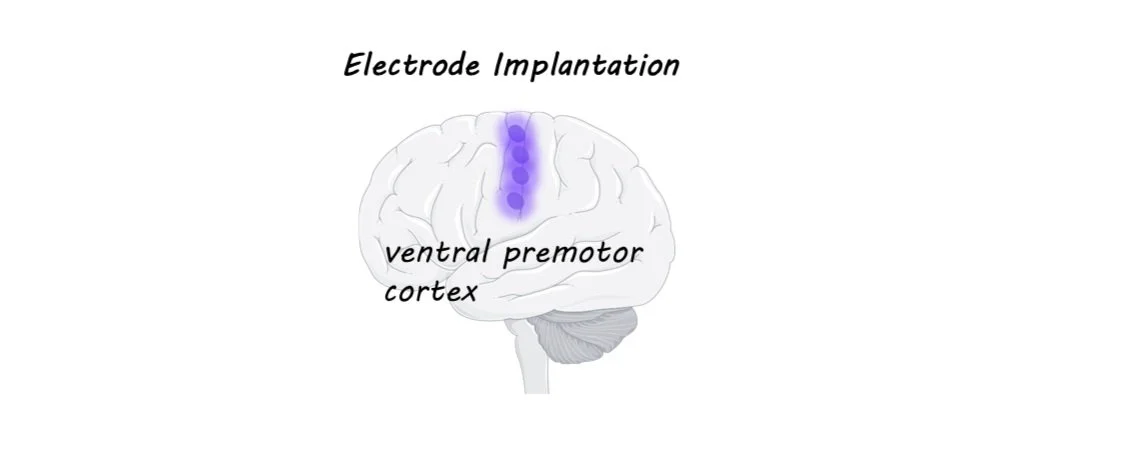

The authors then extracted and pooled key data from each study and ran a series of statistical tests to evaluate whether pretreatment clinical features (such as mood-assessment scores and patient demographics) or neuroimaging features (such as brain structure and activity) better predicted successful treatment outcomes. They also assessed which imaging modality (resting-state fMRI, task-based fMRI, or structural MRI) most accurately predicted patient responses to electroconvulsive therapy (ECT) or antidepressant medication, and which brain regions were associated with the success of these treatments.

What did they find?

The authors found that pretreatment brain-imaging features were better than clinical features at predicting which patients would respond to treatment. Among the imaging modalities, resting-state fMRI showed the greatest sensitivity, meaning it correctly identified the largest share of patients who were likely to benefit from a particular treatment. The most predictive brain regions were located predominantly in the limbic system and the default mode network, two brain networks known to be involved in depression. Notably, alterations in regions of the limbic network were associated with the success of both antidepressant treatment and ECT, whereas regions of the default mode network were linked primarily to antidepressant efficacy.

What's the impact?

This study found that neuroimaging data can predict which treatments are most likely to be effective for patients with MDD, and it highlights the imaging modalities and brain regions that best estimate treatment success. This research could help clinicians identify which patients are most likely to respond to specific treatments, allowing them to consider alternative options when necessary. These findings could also inspire further research into using neuroimaging to predict treatment outcomes in other psychiatric conditions.