Decoding of Natural Sounds in Congenitally Blind Individuals

Post by Stephanie Williams

What's the science?

Previous work has shown that patterns of brain activity measured with functional magnetic resonance imaging (fMRI) can be used to classify sounds. Typically, these studies use complex sounds (traffic, nature sounds) as the stimuli, and the classifiers (machine learning models) are built to predict which category of sound was presented. For example, fMRI data could be used to predict whether an individual was listening to traffic noise or to a group of people speaking. One region that supports this decoding of auditory information is the early “visual” cortex (V1, V2, V3), which suggests that the early visual cortex processes non-visual, auditory information. Earlier work on auditory decoding in the early visual cortex was performed in sighted individuals only, leaving open the question of whether the same auditory information could be decoded from the visual cortex of blind individuals. This week in Current Biology, Vetter and colleagues show that sound decoding can be performed in both sighted and blind individuals with similar accuracy.

How did they do it?

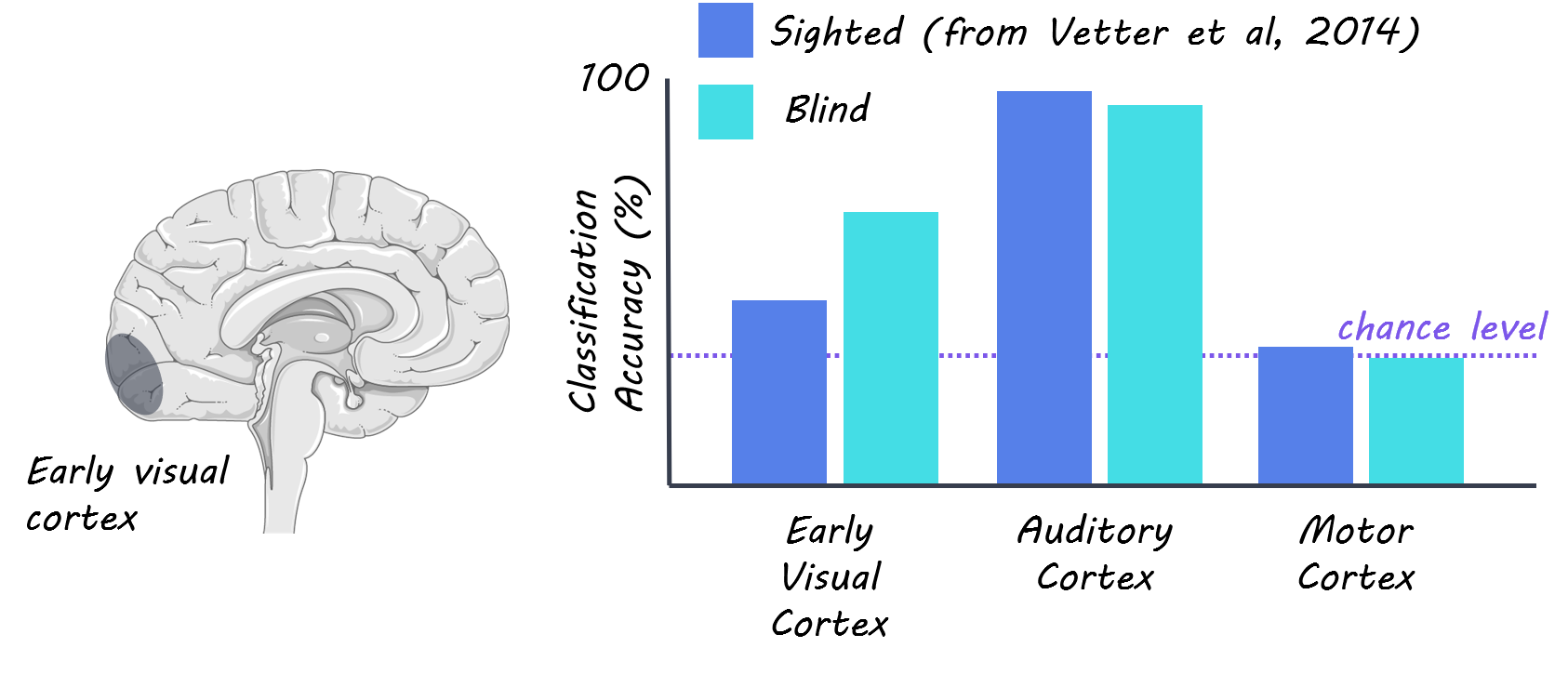

The authors collected fMRI data from 8 congenitally blind individuals while they listened to three different natural scene sounds. They compared these data to previously published data from sighted individuals (N=10), which were collected with similar stimuli and MRI acquisition parameters. The sounds consisted of 1) a bird singing and a stream, 2) people talking without any clear semantic information, and 3) traffic noise with cars and motorbikes. Participants listened to four rounds (‘runs’) of 18 randomized repetitions of the three sounds. The authors focused their analysis primarily on three visual areas called V1, V2, and V3, and further subdivided these into three eccentricities: foveal, peripheral, and far peripheral regions. They also conducted some whole-brain analyses, searching voxel by voxel across the brain, rather than within predefined regions, for voxels that could be used to predict which sound the subjects were listening to. The authors used multivariate pattern analysis (MVPA) to predict which of the three sounds participants were listening to based on the activity patterns derived from the fMRI data. They trained their classifier on three of the four runs and tested it on the left-out fourth run for each subject. They compared decoding accuracy in the early visual cortex with accuracy in the auditory cortex (a positive control) and the motor cortex (a negative control). The authors then analyzed how the sounds were represented across eccentricities in the early visual cortex.
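To make the train-on-three-runs, test-on-the-fourth procedure concrete, here is a minimal sketch in plain Python. It is not the authors' pipeline: the data are synthetic, the sound labels, voxel count, and noise level are made up, the classifier is a simple nearest-centroid rule chosen for brevity, and it assumes 18 trials per run (6 per sound), which is one reading of the design described above.

```python
import random

LABELS = ["forest", "people", "traffic"]  # hypothetical names for the three sounds


def make_synthetic_runs(n_runs=4, trials_per_run=18, n_voxels=20, noise=1.0, seed=0):
    """Build fake data: each run is a shuffled list of (voxel_pattern, label) trials."""
    rng = random.Random(seed)
    # Assumption for illustration: each sound has one fixed underlying voxel pattern.
    templates = {lab: [rng.gauss(0, 1) for _ in range(n_voxels)] for lab in LABELS}
    runs = []
    for _ in range(n_runs):
        trials = []
        for lab in LABELS:
            for _ in range(trials_per_run // len(LABELS)):
                pattern = [t + rng.gauss(0, noise) for t in templates[lab]]
                trials.append((pattern, lab))
        rng.shuffle(trials)
        runs.append(trials)
    return runs


def centroid(patterns):
    """Average pattern across trials, voxel by voxel."""
    return [sum(col) / len(patterns) for col in zip(*patterns)]


def sq_dist(a, b):
    """Squared Euclidean distance between two voxel patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def leave_one_run_out_accuracy(runs):
    """Train on all runs but one, test on the held-out run, and average over folds."""
    fold_accuracies = []
    for test_idx, test_run in enumerate(runs):
        by_label = {}
        for i, run in enumerate(runs):
            if i == test_idx:
                continue  # the held-out run never contributes to training
            for pattern, lab in run:
                by_label.setdefault(lab, []).append(pattern)
        centroids = {lab: centroid(p) for lab, p in by_label.items()}
        correct = 0
        for pattern, true_lab in test_run:
            predicted = min(centroids, key=lambda lab: sq_dist(pattern, centroids[lab]))
            correct += predicted == true_lab
        fold_accuracies.append(correct / len(test_run))
    return sum(fold_accuracies) / len(fold_accuracies)


runs = make_synthetic_runs()
accuracy = leave_one_run_out_accuracy(runs)
print(f"mean decoding accuracy: {accuracy:.2f} (chance = {1 / len(LABELS):.2f})")
```

With three sounds, chance performance is one in three, so decoding counts as successful only when held-out accuracy is reliably above that level. Keeping the test run out of training is what makes the accuracy an estimate of generalization rather than memorization.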

What did they find?

The authors successfully decoded natural sounds from the early visual cortex of congenitally blind individuals. This shows that visual imagery and visual experience are not prerequisites for the representation of auditory information in the early visual cortex, and it suggests that auditory feedback to the visual cortex is organized similarly in sighted and congenitally blind individuals. Compared to the sighted group, the blind group showed higher decoding accuracy in the early visual cortex and lower decoding accuracy in the auditory cortex. This result indicates that visual deprivation may cause sound representation to be more distributed across the auditory and visual cortex in congenitally blind individuals. When the authors analyzed how eccentricity affected decoding results, they found higher decoding accuracy in peripheral regions of the visual cortex than in foveal regions. This finding is consistent with previous research showing that the peripheral visual cortex is connected to many non-visual brain regions. Interestingly, the authors point out that none of the three sounds induced a statistically significant change in overall brain activity, relative to rest, in any of the three early visual areas. This suggests that decoding accuracy is driven by small activity differences across voxels within each region of interest.
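The last point, that a region can show no overall activity change and still carry decodable information, can be illustrated with a toy example. The voxel values below are invented for illustration and are not taken from the study; the pattern-matching rule (pick the template with the largest dot product) is a simple stand-in for a real classifier.

```python
from statistics import mean

# Hypothetical responses of four voxels in one region (arbitrary units).
# Both sounds average to zero across voxels, so the region-wide mean
# cannot tell them apart, but the spatial patterns are opposite.
templates = {
    "sound_a": [0.2, -0.2, 0.1, -0.1],
    "sound_b": [-0.2, 0.2, -0.1, 0.1],
}


def classify(pattern, templates):
    """Return the label whose template has the largest dot product with the pattern."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))
    return max(templates, key=lambda lab: dot(pattern, templates[lab]))


mean_a = mean(templates["sound_a"])
mean_b = mean(templates["sound_b"])

# A noisy, made-up single-trial pattern that resembles sound_a.
probe = [0.15, -0.25, 0.12, -0.05]
predicted = classify(probe, templates)

print(f"mean activity: sound_a={mean_a:.3f}, sound_b={mean_b:.3f}")
print(f"pattern-based prediction for probe: {predicted}")
```

A univariate comparison of the two means finds no difference, while the pattern-based rule still assigns the probe to the matching sound. This is the general reason multivariate analyses can succeed where average activation does not.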

What's the impact?

The authors extend previous work on auditory decoding in the early visual cortex to include blind individuals, showing that there may be a similar organization of auditory information in the early visual cortex of both sighted and blind individuals. This study provides further evidence that the early visual cortex is involved in functions other than the feedforward processing of visual information in both sighted and blind individuals.

Vetter et al. Decoding Natural Sounds in Early “Visual” Cortex of Congenitally Blind Individuals. Current Biology (2020). Access the original scientific publication here.