How Brain-to-Brain Synchrony Between Students and Teachers Relates to Learning Outcomes

Post by Elisa Guma

The takeaway

Synchrony of brain activity among students, and between students and teachers, predicts test performance following lecture-based learning. Furthermore, brain-to-brain synchrony is elevated during lecture segments associated with correctly answered questions.

What's the science?

Social interactions between students and teachers have a profound impact on students’ learning and engagement. Students feel a greater sense of belonging and tend to have better outcomes in synchronous learning (i.e., where students and teacher interact in real time) than in asynchronous learning (where students view prerecorded lectures). However, little is known about the brain mechanisms that support this type of learning. Synchronous brain activity across individuals, referred to as brain-to-brain synchrony, may play a role. This week in Psychological Science, Davidesco and colleagues recorded brain activity from students and teachers in a classroom setting to determine whether brain-to-brain synchrony was associated with learning outcomes.

How did they do it?

The authors recruited 31 healthy young adult males and females and two professional high school science teachers (one male and one female) to participate in the study. Students were divided into 9 groups of 4 and attended four 7-minute teacher-led science lectures covering bipedalism, insulin, habits and niches, and lipids. To assess learning, students completed an assessment of 10 multiple-choice questions at 3 timepoints: (1) pretest: 1 week before the lectures, (2) immediate posttest: immediately after each 7-minute lecture, and (3) delayed posttest: 1 week after the lectures.

Electroencephalography (EEG) was recorded from both students and teachers during each lecture and testing session to measure brain activity in real time with high temporal resolution. The data were preprocessed and filtered into three frequency bands: theta (3-7 Hz), alpha (8-12 Hz), and beta (13-20 Hz). The signals were then averaged within three predefined regions of interest (posterior, central, and frontal), according to where each recording electrode was positioned on the participant's head.
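For readers curious about the mechanics, the band and region step can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the authors' pipeline: the band edges come from the text above, but the electrode names (standard 10-20 labels such as Cz) and their assignment to regions are hypothetical, and the per-electrode values stand in for whatever band-limited measure is being averaged.

```python
from typing import Dict, Optional

# Frequency bands (Hz) as listed in the summary.
BANDS_HZ = {"theta": (3.0, 7.0), "alpha": (8.0, 12.0), "beta": (13.0, 20.0)}

# Hypothetical electrode-to-region map using standard 10-20 labels;
# the study's actual montage is not described here.
REGION_OF = {
    "F3": "frontal", "Fz": "frontal", "F4": "frontal",
    "C3": "central", "Cz": "central", "C4": "central",
    "P3": "posterior", "Pz": "posterior", "P4": "posterior",
}


def band_of(freq_hz: float) -> Optional[str]:
    """Return the band containing freq_hz, or None if it falls between bands."""
    for name, (low, high) in BANDS_HZ.items():
        if low <= freq_hz <= high:
            return name
    return None


def average_by_region(values_by_electrode: Dict[str, float]) -> Dict[str, float]:
    """Average per-electrode values within each region; unmapped electrodes are skipped."""
    totals: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for electrode, value in values_by_electrode.items():
        region = REGION_OF.get(electrode)
        if region is None:
            continue
        totals[region] = totals.get(region, 0.0) + value
        counts[region] = counts.get(region, 0) + 1
    return {region: totals[region] / counts[region] for region in totals}


if __name__ == "__main__":
    # Made-up values for one student, purely for illustration.
    print(band_of(10.0))  # alpha
    print(average_by_region({"C3": 1.0, "Cz": 2.0, "C4": 3.0, "Fz": 4.0, "Oz": 9.0}))
```

Note that in this sketch a frequency such as 7.5 Hz belongs to no band, mirroring the gaps between the ranges given above.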

To quantify learning outcomes, the authors categorized a question as “learned” if it was answered incorrectly in the pretest but correctly in either of the posttests, and as “not learned” if the student’s answer was unchanged from pretest to posttest. The authors compared brain activity patterns (1) across pairs of students and (2) between students and the teacher to determine whether there was brain-to-brain synchrony. Next, they evaluated whether brain-to-brain synchrony during the lectures was associated with learning outcomes (pretest-to-posttest change). Finally, they evaluated whether brain-to-brain synchrony was higher during lecture segments corresponding to questions the students learned than during segments corresponding to questions they did not learn.

What did they find?

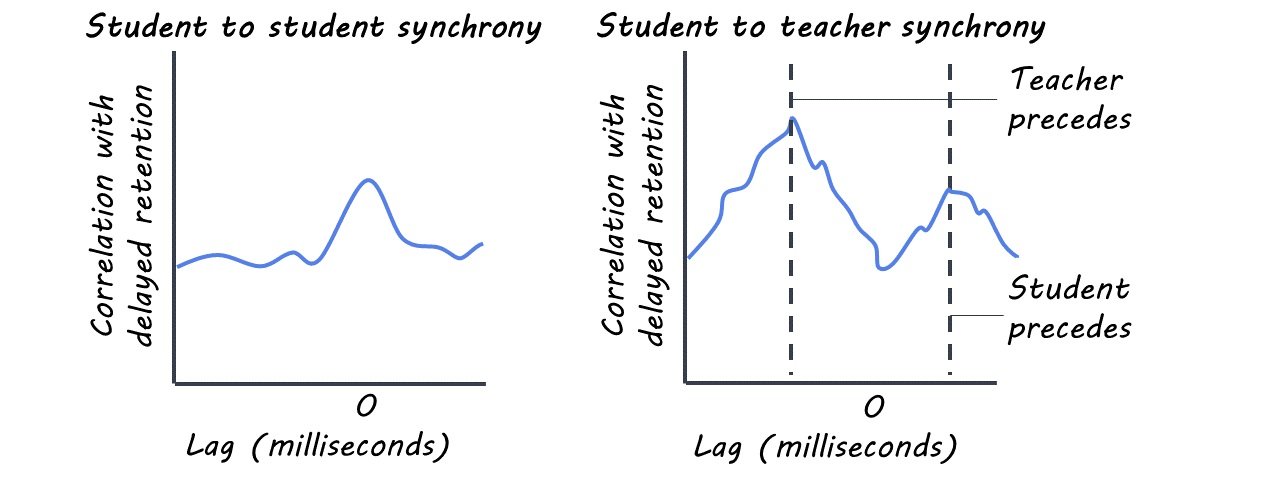

First, the authors found that test performance significantly improved from the pretest to the immediate posttest and, to a lesser extent, from the pretest to the delayed posttest. Next, they found evidence of brain-to-brain synchrony among students in alpha-band activity recorded from the central electrodes. Interestingly, this synchrony predicted both pretest-to-immediate-posttest and pretest-to-delayed-posttest learning. However, it was not related to changes in performance between the two posttest sessions. Additionally, alpha-band synchrony was higher during lecture segments corresponding to learned versus not-learned questions.

Next, the authors found a temporal lag in brain-to-brain synchrony between students and teachers: the teacher’s brain activity patterns preceded those of the students by 300 ms. This is likely because the teacher served as the speaker and the students as the listeners. Furthermore, student-to-teacher brain-to-brain synchrony significantly predicted pretest-to-delayed-posttest learning but not pretest-to-immediate-posttest learning.

What's the impact?

This study extends our understanding of how synchronous brain activity among students, and between students and their teachers, may be related to learning. Alpha-band activity recorded over central regions of the scalp appears particularly relevant to this type of synchrony. In future studies, it may be interesting to acquire other physiological signals, such as heart rate, body motion, or eye movements, to see how these relate to EEG-measured brain activity in supporting learning.