Modeling Sound Localization in the Brain

Post by Anastasia Sares

The takeaway

Tiny delays in sound between our ears help us determine the location of sounds in space. Using simulations of neuronal activity, the authors compared two models of auditory delay processing and found that a model supported by research in mammals better accounted for human brain responses than a model supported by research in other animals (mainly birds).

What's the science?

We don’t often think about it, but our auditory system is extremely good at localizing sound. This is what makes it possible to hear a car driving up behind you, or to automatically reach for your phone when it buzzes. Among the main cues that help us localize sound are inter-aural timing differences (ITDs), where sound arrives at one ear slightly before the other. The differences can be minute (on the order of millionths of a second) and are calculated within the first few synapses of the auditory system—which are extremely difficult to probe and can only be accessed with invasive surgery. Because of this, even though we have known about the importance of these timing cues since at least the 1800s, we still don’t have a complete idea of how they are represented in the human brain. Many psychology courses still teach the Jeffress model, which assumes that the calculation of ITDs is mostly due to differing lengths of axons coming in from either side of the head. This model finds some support in animal research (like in owls), but it is not certain that primates’ auditory systems work the same way.

This week in Current Biology, Undurraga and colleagues combined neuroimaging data and computer simulations to show how inter-aural time delays might be processed in humans.

How did they do it?

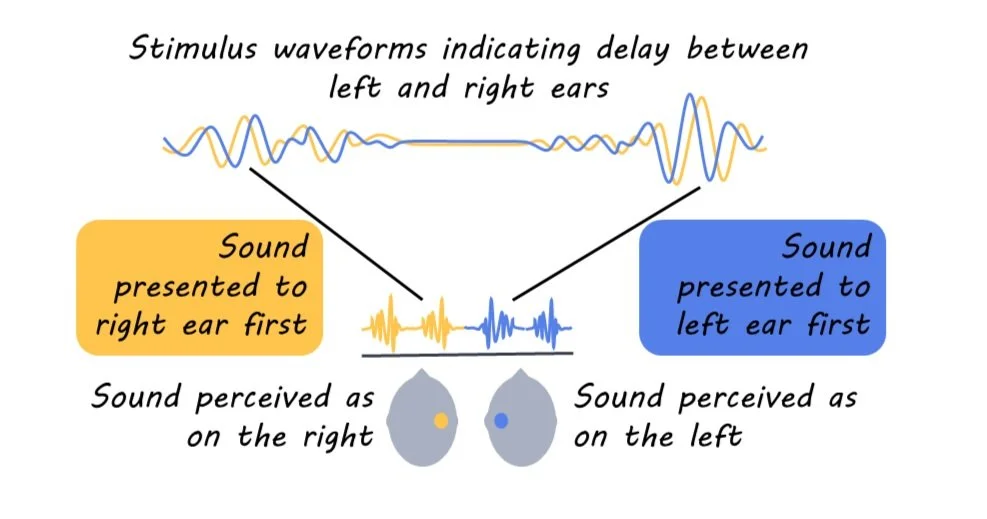

Participants’ electroencephalography (EEG) and magnetoencephalography (MEG) data were recorded while they listened to a noise stimulus through headphones. The noise varied in inter-aural timing difference (ITD) between the two ears. Sometimes the sound to the left ear was played slightly earlier (simulating a sound coming from the left), and sometimes the sound to the right ear was played slightly earlier (simulating a sound coming from the right). The amount of delay also varied from trial to trial. The experiment included a realistic delay for direct sound coming from a nearby object (500 microseconds) as well as much longer delays that might indicate reverberations from a far-away source (up to 4000 microseconds).
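To make the idea of an ITD stimulus concrete, here is a minimal Python sketch of how a noise burst with a chosen delay between the ears could be built. It is only an illustration: the function name `make_itd_noise`, the 48 kHz sample rate, the 0.1-second duration, and the whole-sample delay are all assumptions, not details of the authors' actual stimulus.

```python
import random

def make_itd_noise(itd_us, fs=48000, duration_s=0.1, seed=0):
    """Return (left, right) noise channels as lists of samples.

    Positive itd_us means the left ear leads (sound appears to come from
    the left); negative means the right ear leads. All parameter values
    here are illustrative assumptions.
    """
    rng = random.Random(seed)
    n = int(fs * duration_s)
    # Convert the delay from microseconds to a whole number of samples.
    shift = round(abs(itd_us) * 1e-6 * fs)
    noise = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # The lagging ear hears the same noise, pushed later by `shift` samples.
    delayed = [0.0] * shift + noise[:n - shift]
    if itd_us >= 0:
        return noise, delayed   # left leads, right lags
    return delayed, noise       # right leads, left lags

# A short, "direct sound" delay and a long, "reverberation-like" delay.
left_short, right_short = make_itd_noise(500)
left_long, right_long = make_itd_noise(-4000)
```

At 48 kHz, a 500-microsecond delay is 24 samples and a 4000-microsecond delay is 192 samples, so both of the delays mentioned above land on whole samples in this sketch.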

The authors then looked for fluctuations in the brain data that matched the rate of changes in ITD while being insensitive to other changes in the signal (like intensity/loudness or frequency/pitch). These fluctuations in the brain data that are locked to the changing ITD are termed the ITD-following response.

Finally, the authors created simulations of neuronal activity based on two different theories about how ITD is processed: one closer to Jeffress’ delay-line model (with more support in bird studies), and one called the π-limit model (with some support in other mammals). They measured how well these simulated neuronal arrays could reproduce the human data.
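For readers curious about what a delay-line model does in principle, the following Python sketch shows the basic idea in a very simplified form: a bank of "coincidence detectors", each with its own internal delay, where the detector whose delay cancels out the ITD responds most strongly. This is a textbook-style cross-correlation illustration, not the authors' simulation, and it does not implement the π-limit model. The function name `best_internal_delay`, the sample rate, and the range of lags are assumptions made for the example.

```python
import random

def best_internal_delay(left, right, max_lag):
    """Return the lag (in samples) at which the two ear signals match best.

    Each candidate lag stands in for one hypothetical coincidence detector.
    A positive result means the right-ear signal lags the left-ear signal.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Sum of products: large when the shifted signals line up.
        score = 0.0
        for i in range(max_lag, n - max_lag):
            score += left[i] * right[i + lag]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Build a toy stimulus: the right ear hears the noise 24 samples late.
fs = 48000                      # assumed sample rate in Hz
rng = random.Random(1)
noise = [rng.gauss(0.0, 1.0) for _ in range(2000)]
shift = 24                      # 500 microseconds at 48 kHz
left = noise
right = [0.0] * shift + noise[:-shift]

lag = best_internal_delay(left, right, max_lag=40)
itd_us = lag / fs * 1e6         # convert samples back to microseconds
print(lag, round(itd_us))
```

In this toy case the winning detector is the one with a 24-sample internal delay, which corresponds to the 500-microsecond ITD that was put in. Real neuronal models are far richer than this, but the sketch captures why an array of differently delayed detectors can, in principle, encode where a sound is coming from.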

What did they find?

The ITD-following response appeared in multiple brain areas. Unsurprisingly, the auditory cortex was highly responsive to changes in ITD, but somatosensory regions were also fairly responsive. The right hemisphere responded more than the left. The ITD-following response was strongest at the shortest delay (500 microseconds). However, the response didn’t just fall off at longer delays. Instead, there was a strange oscillating pattern, indicating that some delays provoked a bigger response than others.

Simulations indicated that the mammal-like model was more appropriate than the bird-like model in explaining the human brain activity data. In fact, when fitting the bird model to human data, it ended up taking on the properties of the mammal-like model anyway. As for the strange oscillating pattern at very long delays, the authors found it was likely a side-effect: a convergence of responses from neurons that primarily respond to shorter ITDs. The oscillations were sort of like “echoes” of the short-delay neurons’ activity, stretching out to a longer time frame. This means there is probably not a separate dedicated mechanism producing the oscillating delay patterns, though they could still be useful in helping us to detect reverberation patterns in our environment.

What's the impact?

We often use data from animals to understand the human brain. However, this study reminds us that sometimes animal and human brains work differently, and we need to be careful about assuming that the human brain works the same as whatever animal model we happen to be using.